My 12" iBook G4 is celebrating its 8th birthday today! Time for a little present. How about R 2.14.0?

The iBook is still in daily use, mostly for browsing the web, writing e-mails and this blog, and I still use it for R as well. For a long time it ran R 2.10.1, the last PowerPC binary version available on CRAN for Mac OS 10.4.11 (Tiger).

But R 2.10.1 is a bit dated by now, and for the development of my googleVis package I require at least R 2.11.0. So I decided to try installing the most recent version from source, using Xcode 2.5 and TeXLive-2008.

R 2.14.0 is expected to be released on Monday (31st October 2011). The pre-release version is already available on CRAN. I assume that the pre-release version is pretty close to the final version of R 2.14.0, so why wait?

It was actually surprisingly easy to compile the command line version of R from source. The GUI would be nice to have, but I am perfectly happy to run R via the Terminal, xterm and Emacs. However, it shouldn't be a surprise that running configure, make, make install on an 800 MHz G4 with 640 MB of memory does take its time.

Below you will find the build details. Please feel free to get in touch with me if you would like access to my Apple Disk Image (dmg) file. You can find my e-mail address in the maintainer field of the googleVis package.

Building R from source on Mac OS 10.4 with Xcode 2.5 (gcc-4.0.1)

Before you start, make sure you have all the Apple Developer Tools installed. I have Xcode installed in /Developer/Applications.
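A quick way to confirm that the build tools are actually reachable from the Terminal is to look them up on the PATH before running configure. The following is only a minimal sketch: the helper name missing_tools is made up for illustration, and the list of tools is my assumption, taken from the compilers named in the configure summary further down (gcc, g++, gfortran) plus make.

```shell
# Print the name of every listed tool that cannot be found on the PATH.
# (missing_tools is a hypothetical helper, not part of R or Xcode.)
missing_tools() {
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
    done
}

# Tool names assumed from the configure summary in this post.
missing=$(missing_tools gcc g++ gfortran make)
if [ -n "$missing" ]; then
    echo "Not found on PATH:" $missing
else
    echo "All build tools found."
fi
```

If anything is reported as missing, it is probably easier to sort that out first than to wait for configure to stop part-way through.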

From the pre-release directory on CRAN I downloaded the file R-rc_2011-10-28_r57465.tar.gz.

After downloading the file, I extracted the archive and ran the configure script to generate the various Makefiles. To do this, I opened the Terminal programme (it's in the Utilities folder of Applications), changed into the directory in which I had stored the tar.gz file and typed:

tar xvfz R-rc_2011-10-28_r57465.tar.gz

cd R-rc

./configure

This process took a little while (about 15 minutes) and at the end I received the following statement:

R is now configured for powerpc-apple-darwin8.11.0

Source directory: .

Installation directory: /Library/Frameworks

C compiler: gcc -std=gnu99 -g -O2

Fortran 77 compiler: gfortran -g -O2

C++ compiler: g++ -g -O2

Fortran 90/95 compiler: gfortran -g -O2

Obj-C compiler: gcc -g -O2 -fobjc-exceptions

Interfaces supported: X11, aqua, tcltk

External libraries: readline, ICU

Additional capabilities: NLS

Options enabled: framework, shared BLAS, R profiling, Java

Recommended packages: yes

With all the relevant Makefiles in place I could start the build process via:

make -j8
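The -j option tells make how many jobs to run in parallel. I used 8 here, but on a single-processor machine a smaller number would probably do just as well. As a rough sketch, the job count could be derived from the number of CPUs instead of being hard-coded. The function name cpu_count is my own, and the fallback chain (sysctl on Mac OS, getconf elsewhere, then 1) is an assumption rather than anything the R build requires.

```shell
# Print the number of CPUs, falling back to 1 if it cannot be determined.
# (cpu_count is a hypothetical helper name.)
cpu_count() {
    n=$(sysctl -n hw.ncpu 2>/dev/null) ||
        n=$(getconf _NPROCESSORS_ONLN 2>/dev/null) ||
        n=1
    # Guard against empty or non-numeric output.
    case "$n" in
        ''|*[!0-9]*) n=1 ;;
    esac
    echo "$n"
}

njobs=$(cpu_count)
# Only prints the command; replace echo with the real call to start the build.
echo "Would run: make -j$njobs"
```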

Now I had time for a cup of tea, as the build took about one hour. Finally, to finish the installation, I put the new R version into place in /Library/Frameworks/ by typing:

sudo make install

Job done. Let's test it:

Grappa:~ Markus$ R

R version 2.14.0 RC (2011-10-28 r57465)

Copyright (C) 2011 The R Foundation for Statistical Computing

ISBN 3-900051-07-0

Platform: powerpc-apple-darwin8.11.0 (32-bit)

R is free software and comes with ABSOLUTELY NO WARRANTY.

You are welcome to redistribute it under certain conditions.

Type 'license()' or 'licence()' for distribution details.

Natural language support but running in an English locale

R is a collaborative project with many contributors.

Type 'contributors()' for more information and

'citation()' on how to cite R or R packages in publications.

Type 'demo()' for some demos, 'help()' for on-line help, or

'help.start()' for an HTML browser interface to help.

Type 'q()' to quit R.

> for(i in 1:8) print("Happy birthday iBook!")

[1] "Happy birthday iBook!"

[1] "Happy birthday iBook!"

[1] "Happy birthday iBook!"

[1] "Happy birthday iBook!"

[1] "Happy birthday iBook!"

[1] "Happy birthday iBook!"

[1] "Happy birthday iBook!"

[1] "Happy birthday iBook!"

The installation of additional packages worked straightforward via

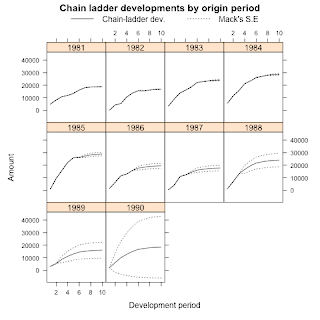

install.packages(c("vector of packages")), though it took time, as everything was build from sources. This it what it looks like on my iBook G4 today:

> installed.packages()[,"Version"]

ChainLadder GillespieSSA Hmisc ISOcodes KernSmooth MASS

"0.1.5-0" "0.5-4" "3.8-3" "2011.07.31" "2.23-6" "7.3-16"

Matrix R.methodsS3 R.oo R.rsp R.utils RColorBrewer

"1.0-1" "1.2.1" "1.8.2" "0.6.2" "1.8.5" "1.0-5"

RCurl RJSONIO RUnit Rook XML actuar

"1.6-10" "0.96-0" "0.4.26" "1.0-2" "3.4-3" "1.1-2"

base bitops boot brew car class

"2.14.0" "1.0-4.1" "1.3-3" "1.0-6" "2.0-11" "7.3-3"

cluster coda codetools coin colorspace compiler

"1.14.1" "0.14-4" "0.2-8" "1.0-20" "1.1-0" "2.14.0"

data.table datasets digest flexclust foreign gam

"1.7.1" "2.14.0" "0.5.1" "1.3-2" "0.8-46" "1.04.1"

ggplot2 googleVis grDevices graphics grid iterators

"0.8.9" "0.2.10" "2.14.0" "2.14.0" "2.14.0" "1.0.5"

itertools lattice lmtest mclust methods mgcv

"0.1-1" "0.20-0" "0.9-29" "3.4.10" "2.14.0" "1.7-9"

modeltools mvtnorm nlme nnet parallel party

"0.2-18" "0.9-9991" "3.1-102" "7.3-1" "2.14.0" "0.9-99994"

plyr proto pscl reshape rpart sandwich

"1.6" "0.3-9.2" "1.04.1" "0.8.4" "3.1-50" "2.2-8"

spatial splines statmod stats stats4 strucchange

"7.3-3" "2.14.0" "1.4.13" "2.14.0" "2.14.0" "1.4-6"

survival systemfit tcltk tools utils vcd

"2.36-10" "1.1-8" "2.14.0" "2.14.0" "2.14.0" "1.2-12"

zoo

"1.7-5"

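Since every package is compiled from source on this machine, it can be convenient to start a whole batch of installs from the Terminal and leave it running. Here is a small sketch of how the install.packages() call could be assembled in the shell. The helper r_install_expr is hypothetical, the package names are just examples from the list above, and the commented-out Rscript line assumes that Rscript is on the PATH and that a CRAN mirror has already been configured.

```shell
# Build an R call such as install.packages(c("plyr", "zoo"), type = "source")
# from the package names given as arguments.
# (r_install_expr is a hypothetical helper name.)
r_install_expr() {
    list=""
    for pkg in "$@"; do
        # Append each name in double quotes, separated by ", ".
        list="${list:+$list, }\"$pkg\""
    done
    printf 'install.packages(c(%s), type = "source")\n' "$list"
}

call=$(r_install_expr plyr zoo)
echo "$call"
# To actually install (assumes Rscript on the PATH and a configured CRAN mirror):
# Rscript -e "$call"
```

Printing the call first makes it easy to check what would be run before committing the iBook to a long compile.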
Update (3 June 2012)

Just updated my R installation to R-2.15.0 and the above procedure still worked. But I had to be patient. It took at least an hour to compile R and the core packages.

R version 2.15.0 Patched (2012-06-03 r59505) -- "Easter Beagle"

Copyright (C) 2012 The R Foundation for Statistical Computing

ISBN 3-900051-07-0

Platform: powerpc-apple-darwin8.11.0 (32-bit)

Update (1 June 2013)

Just updated my R installation to R-3.0.1 and the above procedure still worked. The iBook will be 10 years old soon and is still going strong. Not bad for such an old laptop.

R version 3.0.1 (2013-05-16) -- "Good Sport"

Copyright (C) 2013 The R Foundation for Statistical Computing

Platform: powerpc-apple-darwin8.11.0 (32-bit)